-

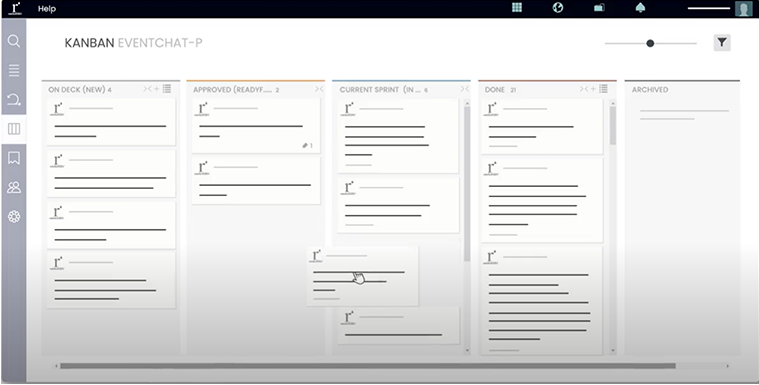

We develop groundbreaking technology

Our team of technologists, architects, product owners, and software engineers applies a multidisciplinary approach, whether developing products from scratch or growing an existing technology. Our experience ranges from simple application, API, and platform development to AI/ML, blockchain, AR/VR, and metaverse technologies. Our teams ensure your company remains competitive in the global arena.

-

We strategize and execute on marketing

Our team focuses on insights carefully harvested from a data-driven marketing approach. Awareness and conversion are key to executing a successful marketing campaign and creating a self-actuating funnel strategy. Driven by key performance indicators such as acquisition cost, audience engagement, brand lift, and research ability quotients, Marketing Machine applies deep understanding to industry-leading marketing practices.

-

We support you with advising and consulting

Our operational consulting and advising are built on a deep understanding of the challenges facing today’s technology, marketing, and operational leadership. Marketing Machine takes a prescriptive approach to help you launch the right product, to the right audience, at the right time. Our team works as an extension of your executive management team to help each department accomplish its deliverables and reach its full potential.

We focus on building. We’ve brought over 200 companies to market. We bet you’ve heard of some.

Marketing Machine plays a pivotal role in helping companies drive innovation forward, faster.